What Does Flag Output Do in Google Flow?

As generative AI tools become increasingly embedded in business workflows, collaboration platforms, and automation systems, understanding how to manage and control AI-generated content is more important than ever. Google Flow, part of Google’s growing ecosystem of AI-powered tools, introduces a feature known as Flag Output. At first glance, it may seem like a small control mechanism, but in reality, it plays a significant role in quality assurance, compliance, and responsible AI usage. Whether you are a developer, a content moderator, or a business leader integrating AI into operations, knowing what Flag Output does can help you harness Google Flow more effectively.

TLDR: Flag Output in Google Flow is a control mechanism that identifies, marks, or routes AI-generated outputs that may require review, moderation, or special handling. It helps organizations manage risks, maintain compliance, and improve content reliability. By flagging potentially sensitive, biased, or policy-violating outputs, teams can intervene before content reaches end users. Ultimately, it acts as a safeguard for responsible and scalable AI deployment.

Contents

- 1 Understanding Google Flow in Context

- 2 What Does Flag Output Actually Do?

- 3 Why Flag Output Matters in AI Workflows

- 4 How Flag Output Works Behind the Scenes

- 5 Practical Use Cases of Flag Output

- 6 Flag Output vs. Blocking Output

- 7 Improving Governance and Auditability

- 8 Strengthening Human-in-the-Loop Systems

- 9 Designing Effective Flagging Rules

- 10 Limitations and Considerations

- 11 The Bigger Picture: Responsible AI at Scale

- 12 Conclusion

Understanding Google Flow in Context

Before diving deeply into Flag Output, it helps to understand what Google Flow is designed to do. Google Flow is built to orchestrate tasks, automate processes, and connect AI-driven components into structured workflows. It allows organizations to:

- Automate repetitive tasks

- Trigger conditional actions based on logic

- Integrate AI models into business processes

- Control how outputs are routed and handled

When AI models generate responses, summaries, classifications, or predictions inside a workflow, those outputs often move directly into downstream systems. Sometimes they populate a CRM. Other times they publish content, send emails, or trigger additional automations.

This is where control mechanisms become essential. Not every AI-generated output should automatically proceed unchecked.

What Does Flag Output Actually Do?

Flag Output introduces a layer of oversight within these automated pipelines. At its core, it marks a specific AI-generated result as needing attention. This flag can be triggered based on:

- Content moderation rules

- Confidence thresholds

- Custom logic conditions

- Policy violations

- Sensitive or regulated content patterns

Once flagged, the output does not necessarily get deleted. Instead, it can be rerouted, queued for review, logged for auditing, or prevented from triggering downstream automation steps.

Think of it as a traffic control signal inside an AI-powered workflow. Normally, outputs get a green light. If certain conditions are met, Flag Output flips that to yellow or red, requiring closer inspection.

Why Flag Output Matters in AI Workflows

AI systems are powerful, but they are not infallible. They can:

- Generate hallucinated information

- Produce biased or sensitive content

- Misinterpret ambiguous prompts

- Provide inaccurate summaries

In low-risk scenarios, minor inaccuracies might be acceptable. But in industries such as healthcare, finance, legal services, or customer communications, the cost of an unfiltered AI mistake can be significant.

Flag Output serves as a built-in mitigation strategy. Instead of relying solely on human reviewers to catch errors after publication, the system proactively detects risk conditions before damage occurs.

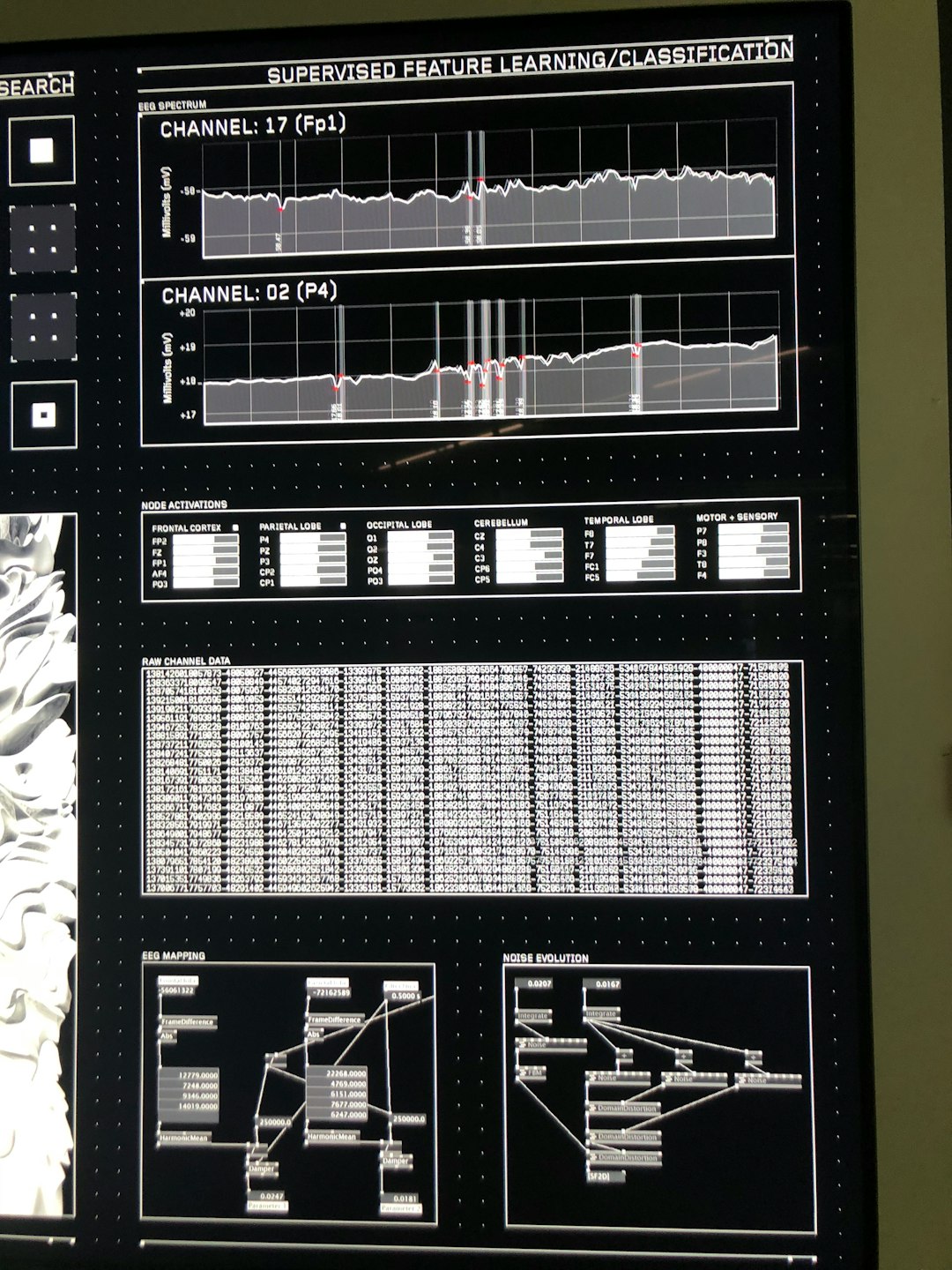

How Flag Output Works Behind the Scenes

Although implementation details may vary, Flag Output typically operates through rule-based or model-based evaluation criteria. Here’s a simplified view of the process:

1. AI generates content or data.

2. Evaluation logic runs immediately after generation.

3. Predefined conditions are checked.

4. If conditions are met, the output is marked with a flag.

5. The workflow branches into an alternative handling path.

These conditions can include:

- Low confidence scores

- Presence of restricted keywords

- Sentiment thresholds

- Regulatory compliance triggers

Once flagged, outputs can follow alternative routes such as:

- Manual moderation queues

- Supervisor approval steps

- Additional AI review layers

- Automatic suppression

This branching logic transforms Google Flow from a basic automation tool into an intelligent oversight system.
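The generate, evaluate, flag, and branch sequence described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Google Flow's actual interface: the `Output` class, the rule functions, the 0.6 confidence cutoff, the keyword list, and the route names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical names throughout; none of this is a Google Flow API.
@dataclass
class Output:
    text: str
    confidence: float
    flags: list[str] = field(default_factory=list)

# A rule inspects an output and returns a reason string if it should be flagged.
Rule = Callable[[Output], Optional[str]]

RESTRICTED = {"guaranteed returns", "miracle cure"}  # assumed keyword list

def low_confidence(out: Output) -> Optional[str]:
    return "low_confidence" if out.confidence < 0.6 else None

def restricted_keyword(out: Output) -> Optional[str]:
    lowered = out.text.lower()
    return "restricted_keyword" if any(k in lowered for k in RESTRICTED) else None

def evaluate(out: Output, rules: list[Rule]) -> Output:
    """Run every rule and record the reason for each one that fires."""
    for rule in rules:
        reason = rule(out)
        if reason is not None:
            out.flags.append(reason)
    return out

def route(out: Output) -> str:
    """Branch: flagged outputs go to review, everything else continues."""
    return "manual_review" if out.flags else "downstream"

RULES = [low_confidence, restricted_keyword]

clean = evaluate(Output("Your order has shipped.", 0.93), RULES)
risky = evaluate(Output("This fund offers guaranteed returns.", 0.41), RULES)
print(route(clean), clean.flags)
print(route(risky), risky.flags)
```

Keeping each condition as a small function that returns a reason makes it easy to add or retire rules without touching the routing step, and the recorded reasons explain why an output was diverted.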

Practical Use Cases of Flag Output

1. Content Moderation

Organizations generating AI content at scale (product descriptions, chatbot responses, or emails, for example) often use Flag Output to identify problematic language. If toxic or policy-violating content appears, the system flags it for human review.

2. Compliance in Regulated Industries

Financial institutions and healthcare providers must adhere to strict regulatory standards. Flag Output can detect:

- Unauthorized claims

- Sensitive personal data exposure

- Non-compliant phrasing

This ensures outputs are reviewed before reaching customers or public platforms.
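As a rough illustration of pattern-based compliance checks, the sketch below scans text for a few regular expressions. The pattern names and expressions are assumptions chosen for the example; real compliance screening needs far broader coverage and is not defined by the source.

```python
import re

# Illustrative patterns only; these are assumptions, not an official rule set.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn_like": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "unauthorized_claim": re.compile(r"\b(guaranteed|risk-free)\b", re.IGNORECASE),
}

def compliance_flags(text: str) -> list[str]:
    """Return the names of all patterns found in the text, sorted by name."""
    return sorted(name for name, pattern in PATTERNS.items() if pattern.search(text))

sample = "Email jane.doe@example.com about SSN 123-45-6789."
print(compliance_flags(sample))
```

An empty result means no pattern matched, which is not the same as the text being compliant; it only means these particular checks found nothing.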

3. Low Confidence Responses

Some workflows attach confidence scores to AI outputs. If a response falls below a defined threshold, Flag Output can redirect it for analysis instead of letting it proceed automatically.

4. Bias Detection and Ethical Safeguarding

Organizations committed to fairness can use flagging rules to detect potential gender, racial, or socio-economic bias patterns. When triggered, the output can undergo secondary human evaluation.

Flag Output vs. Blocking Output

It is important to distinguish between flagging and blocking.

- Flagging marks content for attention but preserves it for review.

- Blocking completely prevents delivery or storage.

Flagging offers a more nuanced approach. Instead of discarding potentially valuable information, it allows organizations to investigate context. Sometimes flagged content is entirely appropriate once a human sees the full picture.

This balance between automation and oversight is key to responsible AI deployment.
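The difference can be made concrete with a small sketch. The `Disposition` enum and `OutputStore` class are hypothetical names used only to show what each choice keeps: flagged content is withheld but preserved for a reviewer, while blocked content is neither delivered nor stored.

```python
from enum import Enum

class Disposition(Enum):
    PASS = "pass"
    FLAG = "flag"
    BLOCK = "block"

class OutputStore:
    """Hypothetical in-memory store showing what each disposition keeps."""

    def __init__(self) -> None:
        self.delivered: list[str] = []
        self.held_for_review: list[str] = []

    def handle(self, text: str, disposition: Disposition) -> None:
        if disposition is Disposition.PASS:
            self.delivered.append(text)
        elif disposition is Disposition.FLAG:
            # Withheld from delivery, but preserved so a human can inspect it.
            self.held_for_review.append(text)
        # BLOCK: the content is neither delivered nor stored.

    def approve(self, text: str) -> None:
        """A reviewer releases flagged content after seeing the full context."""
        self.held_for_review.remove(text)
        self.delivered.append(text)

store = OutputStore()
store.handle("Routine status update.", Disposition.PASS)
store.handle("Mentions a medication dosage.", Disposition.FLAG)
store.handle("Contains a leaked credential.", Disposition.BLOCK)
store.approve("Mentions a medication dosage.")
```

After the reviewer approves the flagged item, it joins the delivered list; the blocked item is gone and cannot be recovered, which is the trade-off the comparison above describes.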

Improving Governance and Auditability

Modern enterprises must maintain detailed records of how AI systems behave. Flag Output supports this by creating structured checkpoints within workflows.

Benefits include:

- Clear audit trails

- Documented intervention points

- Reduced reputational risk

- Transparent compliance reporting

Flagged instances can be logged with metadata such as timestamp, trigger rule, and reviewer actions. Over time, this dataset becomes invaluable for refining both prompt engineering and filtering logic.
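A minimal sketch of such a log record follows, using the three metadata fields named above. The `FlagEvent` class, the `output_id` field, and the JSON-lines format are assumptions made for illustration rather than a documented schema.

```python
import json
from collections import Counter
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional

@dataclass
class FlagEvent:
    output_id: str                         # hypothetical identifier
    trigger_rule: str
    timestamp: str                         # ISO 8601, UTC
    reviewer_action: Optional[str] = None  # e.g. "approved" or "rejected"

def new_event(output_id: str, trigger_rule: str) -> FlagEvent:
    return FlagEvent(output_id, trigger_rule, datetime.now(timezone.utc).isoformat())

def to_log_line(event: FlagEvent) -> str:
    """Serialize one event as a single JSON line for an append-only log."""
    return json.dumps(asdict(event), sort_keys=True)

def rule_counts(log_lines: list[str]) -> Counter:
    """Count how often each rule fired, to see which rules need tuning."""
    return Counter(json.loads(line)["trigger_rule"] for line in log_lines)

event = new_event("out-001", "restricted_keyword")
event.reviewer_action = "approved"
log = [to_log_line(event), to_log_line(new_event("out-002", "low_confidence"))]
print(rule_counts(log))
```

Counting events per rule is one simple way to use the accumulated log: a rule that fires often but is almost always approved by reviewers is a candidate for loosening.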

Strengthening Human-in-the-Loop Systems

AI works best when combined with human judgment. Flag Output enhances this synergy by selectively inserting humans into the loop only when required.

Instead of reviewing every AI output (which would eliminate efficiency gains), teams review only:

- Edge cases

- High-risk outputs

- Ambiguous interpretations

This selective oversight model maintains productivity while preserving quality.
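One way to picture selective review is a triage step that sends only higher-risk outputs to a queue and hands reviewers the riskiest item first. The sketch below assumes each output already carries a numeric risk score; the `ReviewQueue` class, the `triage` function, and the 0.5 threshold are hypothetical.

```python
import heapq
from itertools import count

class ReviewQueue:
    """Hypothetical queue that hands reviewers the highest-risk item first."""

    def __init__(self) -> None:
        self._heap: list[tuple[float, int, str]] = []
        self._order = count()  # tie-breaker: equal risks keep insertion order

    def submit(self, output_id: str, risk: float) -> None:
        # heapq is a min-heap, so the risk is negated to pop the largest first.
        heapq.heappush(self._heap, (-risk, next(self._order), output_id))

    def next_item(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)

def triage(outputs: dict[str, float], risk_threshold: float,
           queue: ReviewQueue) -> list[str]:
    """Queue outputs at or above the threshold; return the ids that skip review."""
    auto_approved = []
    for output_id, risk in outputs.items():
        if risk >= risk_threshold:
            queue.submit(output_id, risk)
        else:
            auto_approved.append(output_id)
    return auto_approved

queue = ReviewQueue()
passed = triage({"a": 0.1, "b": 0.9, "c": 0.7, "d": 0.2}, 0.5, queue)
first = queue.next_item()
```

In this example half of the outputs never reach a reviewer, and the reviewer sees the riskiest of the remainder first.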

Designing Effective Flagging Rules

The effectiveness of Flag Output depends heavily on thoughtful configuration. Poorly designed rules can result in:

- Over-flagging, creating bottlenecks

- Under-flagging, missing real risks

- False positives due to overly broad filters

Effective strategies include:

- Gradual threshold calibration

- Regular review of flagged cases

- Combining keyword filters with semantic analysis

- Iterative refinement based on real outcomes

As workflows mature, flagging mechanisms should evolve alongside changing regulatory environments and business expectations.
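Threshold calibration can be approached by measuring, on a small set of outputs that humans have already labeled, how different cutoffs trade over-flagging against under-flagging. The sketch below assumes a confidence-based rule that flags anything below the threshold; the sample data is invented purely for illustration.

```python
def flag_metrics(samples: list[tuple[float, bool]], threshold: float) -> dict[str, float]:
    """Evaluate a 'flag if confidence < threshold' rule on labeled samples.

    Each sample is (confidence, is_problematic), where the label comes from
    human review.
    """
    flagged = [bad for conf, bad in samples if conf < threshold]
    total_bad = sum(1 for _, bad in samples if bad)
    true_pos = sum(flagged)  # flagged items that really were problematic
    return {
        "flag_rate": len(flagged) / len(samples),
        "precision": true_pos / len(flagged) if flagged else 0.0,
        "recall": true_pos / total_bad if total_bad else 0.0,
    }

# Invented labeled data: (model confidence, reviewer judged it problematic).
SAMPLES = [
    (0.95, False), (0.90, False), (0.85, False), (0.70, False),
    (0.65, True), (0.55, False), (0.40, True), (0.30, True),
]

strict = flag_metrics(SAMPLES, 0.5)
broad = flag_metrics(SAMPLES, 0.75)
for name, metrics in (("0.50", strict), ("0.75", broad)):
    print(name, metrics)
```

On this sample, the lower threshold flags a quarter of outputs and every flag is justified, but it misses one problematic item; the higher threshold catches all three at the cost of sending five of eight outputs to review. Repeating the measurement as reviewed cases accumulate is one way to calibrate gradually.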

Limitations and Considerations

While powerful, Flag Output is not a standalone solution for AI governance. Limitations include:

- Dependence on predefined rules

- Potential blind spots in new edge cases

- Need for ongoing monitoring and tuning

Flagging works best when combined with broader strategies such as model evaluation, bias testing, continuous training updates, and cross-departmental oversight committees.

The Bigger Picture: Responsible AI at Scale

In many ways, Flag Output represents a broader movement in AI development: embedding responsibility directly into systems rather than relying exclusively on external review processes.

As AI moves from experimental use cases into mission-critical applications, organizations must build workflows that are not only efficient but also safe, transparent, and accountable. Flag Output helps meet this demand by providing structured intervention points within automated environments.

Instead of slowing innovation, flagging mechanisms enable it, because they create confidence. Teams are more willing to deploy AI at scale when guardrails are visibly in place.

Conclusion

Flag Output in Google Flow is far more than a simple tagging feature. It acts as a strategic checkpoint within AI-driven workflows, helping organizations maintain quality, manage risk, and support regulatory compliance. By identifying outputs that require additional attention, it ensures that automation does not outpace oversight.

As AI adoption accelerates, tools like Flag Output will become central to sustainable implementation strategies. They represent a practical acknowledgment that while AI can move fast, careful review and human judgment remain essential. When configured effectively, Flag Output transforms Google Flow from a plain automation engine into a responsible, intelligent orchestration framework.